Claude, everyone’s favorite LLM, has been playing Pokemon. Veterans of this blog may know that I have a big soft spot for Pokemon as an AI benchmark, and am glad that Anthropic apparently does too.

Veterans of this blog will also know that according to my testing, AIs aren’t that good at Pokemon. They only do so-so answering complex hypothetical questions, and utterly fail at waging competitive battles. This is somewhat surprising, because:

Pokemon is the most valuable media property in the world, so data about it is all over the internet.

Pokemon is deterministic and consistent; for example, water always is super effective against fire.

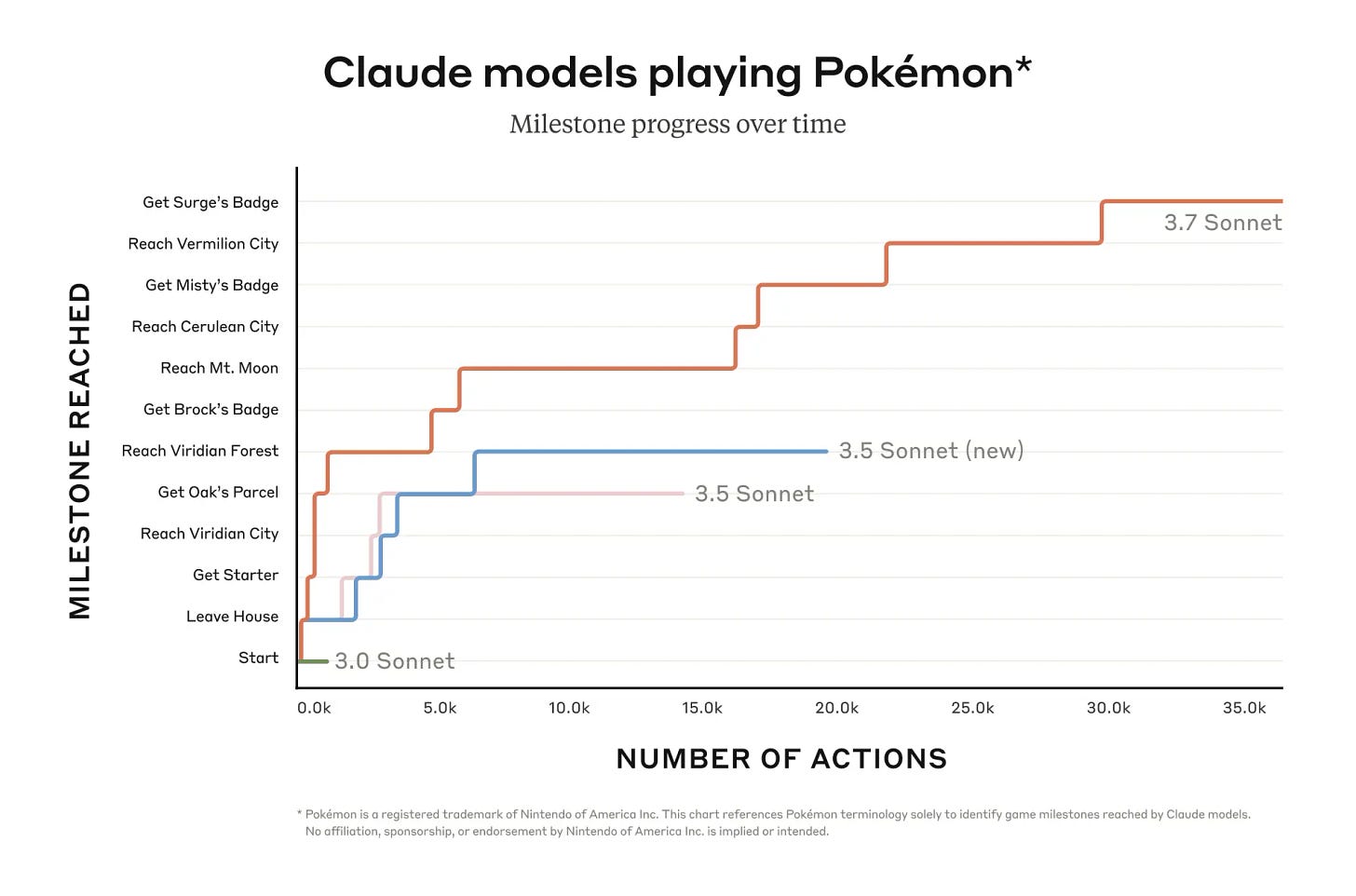

Still, new generations of AI come out just about every month these days, and we can see from Anthropic’s graph that there is Pokemon progress. Furthermore, this new benchmark isn’t answering tricky technical or competitive Pokemon questions; it’s just playing the game. A talented 7-year-old can do that.

Can Claude?

The Interface

LLMs take text in and spit text out. Some can also take in images, which comes in handy for a game like Pokemon, which appears on a screen. So, to play Pokemon (specifically, Pokemon Red, one of the original Pokemon titles), Claude can be fed a bunch of text related to the game state, plus a picture of the screen, and spit out some text corresponding to instructions. For example, it can see that it’s in a battle with the cursor hovering over the move it’d like to use, and emit “press the ‘a’ button”. There are a few more bells and whistles, such as:

Claude’s view of the screen is annotated to make it clearer where it’s allowed to go, to compensate for its poor vision

Claude has access to some macros (most notably, navigating to a specific coordinate instead of “press up, press up, press up, press left” etc.)

Claude can directly see certain values in the game’s RAM, such as its (broadly construed) current location, its Pokemon’s health and status, etc. so it doesn’t have to open the menu and check all the time

But basically, the input channel is game information (like a human player might have), and the output channel is specific game commands (like a human player might make).

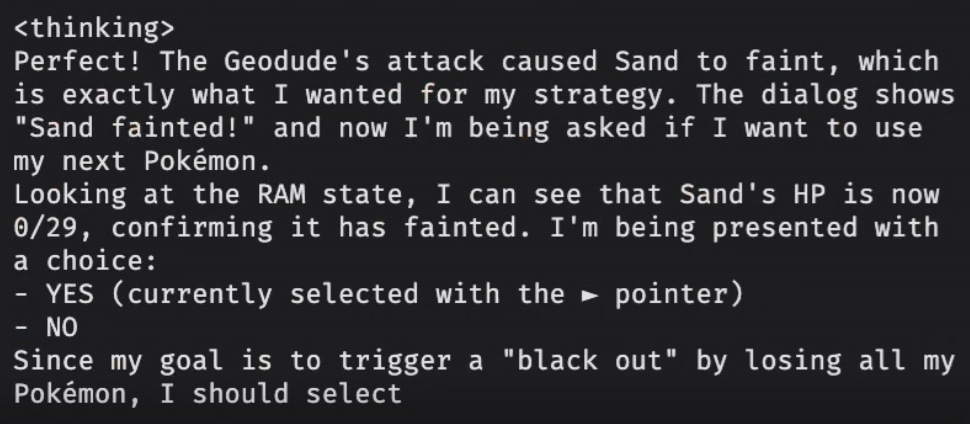

However, in between input and output, Claude has another very valuable tool. Specifically, Claude 3.7 can be used as a thinking model, which generates a bunch of words to represent its train of thought before emitting its actual answer. Indeed, the Claude Plays Pokemon stream actively shows this thinking, so viewers can see the AI’s reasoning. It looks like this:

What’s between the <thinking> tags is Claude’s thoughts. The orange text is its actions. So Claude is dropped into Pokemon Red, given some basic guidelines to try to beat the game, and then it’s simply alone with its own thoughts, with only the game itself as feedback.

Agents and Scaffolds

From the screenshot above, you may notice that Claude generates a fair bit of text for even the smallest decisions. If Claude remembered all of its own internal monologue, its reasoning would soon be drowned out by unimportant minutiae. If, on the other hand, it immediately discarded its thoughts after each step, it would be unable to form and act on short term plans. Since playing a video game often involves hitting several different buttons in a row, Claude needs a mechanism to selectively remember. The solution to this is an agent scaffold.

An agent scaffold is some external store of tooling and memory that an LLM can access, which enables it to take actions and behave consistently over time. If you’ve ever used ChatGPT, a single ChatGPT conversation is a very simple agent scaffold:

The LLM behind ChatGPT has access to the entire history of the conversation, wrapped in decorators that tell it which words came from it and which came from you.

It is given the goal to be a helpful assistant to you, and predicts how best to do that given the conversation so far at every step.

It has the ability to use certain tools (like, draw a picture for you, or search the web), which it deploys selectively in the service of its goal (to help you).

Claude Plays Pokemon has a somewhat more complex agent scaffold. As it moves through the game, it remembers it past several actions. But every so many actions, it consolidates its recent actions into a summary, and stores that summary information in an external file that it can read. It is able to read and write to an arbitrary number of these summary files, to organize the information it obtains in the short sequence of recent actions that it remembers directly.

For example, if Claude has just entered a Pokemon center for the first time, it might open the file corresponding to its knowledge about the town it’s in, and add a line describing the coordinates where the Pokemon center can be found. Claude also tends to maintain files for its big-picture strategy, game progress, goals, and exploration information for whatever area of the game it’s in. It can load and unload these files as needed. So if Claude is finished with, say, Mt. Moon, it can unload all its Mt. Moon files and start using the files for the next section of the game, which is Cerulean City.

The Outcome

First, let’s think for a second. In the Red corner, we have Pokemon Red, a 27-year-old game with hundreds of detailed guides for on the internet. In some sense, Claude has read all of them. In the Blue corner, we have Claude 3.7, broadly considered the best programming AI in the world, able to solve complex logic questions that would stump most computer science graduates. Furthermore, Claude has a complex scaffold to help it keep itself organized (much as it has to during the coding projects it’s known to be good at). This should be easy, right?

It has not been easy.

Claude did make it through the beginning of the game. It lost interest partway through naming its Bulbasaur “Sprout” and settled on “Sprou”, likewise ended up with a Pidgey named “Sand” (it wanted “Sandy”), and it got stuck in several loops in the game’s first hours. It did, however, eke its way forward. Its progress culminated in defeating Brock, earning its first badge out of the 8 needed to beat the game.

Then it made it to Mt. Moon. Where the blackout strategy was born.

Remember how Claude can read and write to arbitrary files, in the service of setting its own intermediate goals? Well, Mt. Moon is a small but confusing dungeon, and Claude spent a while navigating it like a confused 7-year-old might. Lots of excitement when it reached a ladder to a different floor, lots of retreading the same ground, and difficulty remembering which paths had been dead ends.

Still, Claude might have gotten through eventually. Except that at one point, reasonably far in, it blacked out. This is a normal part of Pokemon; you black out when all your Pokemon faint, and you’re sent back to the last Pokemon Center you’ve visited. It’s basically a gentle “game over” state, that lets you try again.

For whatever reason, Claude determined that after the blackout, it respawned past Mt. Moon. There was no reason to believe this, but Claude’s scaffolding kicked in and quickly enshrined this as a belief of great importance. Claude added “cleared Mt. Moon” to its list of achieved goals. Whenever it found itself back in Mt. Moon, it became convinced that it had backtracked, and needed to leave the way it came.

One thing I haven’t mentioned yet is that Claude Plays Pokemon has a “critic” module, that chimes in to let it know if it’s going off the rails. The critic module strongly reinforced that Claude had definitely beaten Mt. Moon, and should be able to advance directly to Cerulean City.

So now, every time Claude enters Mt. Moon, it tries to let all its Pokemon faint on purpose, to allow it to teleport to the Pokemon center and go straight to Cerulean. It has written a strategy document for this purpose, called “mt_moon_blackout_strategy”, which it uses at the expense of every other document it has made. So far Claude has deliberately sacrificed its entire team of Pokemon at least 8 times in a row, without questioning the validity of the blackout strategy itself. Once outside Mt. Moon, it bumps into walls for a while, looking for the clear path to Cerulean it is sure must exist (but doesn’t), then wanders into Mt. Moon and loses on purpose again.

As an example of Claude’s reasoning, when it yet again finds itself in Mt. Moon:

I’ve already verified the correct path to Cerulean City… which goes from Route 4 => Route 3 => Route 4 (North) => Cerulean City. Since I’m already in Mt. Moon and can’t find a direct exit, my best option now would be to deliberately lose a battle to be transported back to the last Pokemon Center I visited…

(The Route 4 => Route 3 => Route 4 (North) => Cerulean City bit is totally wrong. But Claude considers it “confirmed” and will not let it go.)

Why?

Some people have been quick to call “misalignment”, and declare that Claude’s decision to repeatedly let all its Pokemon faint is ominous. I don’t think so, though it is pretty funny.

Others have been eager to add more bells and whistles to Claude Plays Pokemon’s implementation, to help it avoid this specific pitfall or others like it. But what I haven’t told you is that this was actually the second streamed Pokemon run that Claude Plays Pokemon attempted. The first fell into a similar (but different) degenerate loop, after completing Mt. Moon. The remedy was increased scaffolding. That remedy seems to have failed.

In fact, I think the blackout strategy phenomenon is a tidy expression of the weaknesses of agent scaffolding in general. It’s tempting to think that you can use LLMs as a “core” of a broader system, where native text-prediction capabilities handle cognition, and other systems handle memory retrieval, plan calcification, etc. And indeed, this does work for some cases, some of the time.

But really, Claude Plays Pokemon (and the blackout strategy) is a tale as old as time: greedy hill-climbing. Claude will bop along through the game for a while, but at every step there’s always some chance that it ends up having some misapprehension that gets it stuck in a loop. It might start believing that it needs to go South when it really has to go North, or that it needs to find an underground passage that doesn’t exist, or that the Cerulean City bike shop is an important hub that connects different parts of the town (all real examples). Error-detection scaffolding will sometimes catch and dispose of these errors, but will other times enshrine them. Basically, Claude will end up in a local maximum at some point, where it has constructed a narrative in its scaffold that tells it to do one thing in particular, and the outcome of that thing will be ambiguous enough that it never changes its mind.

Every blackout, Claude celebrates, because it has woken up at the Pokemon Center, which is has confirmed is past Mt. Moon. Another win for the blackout strategy!

The Solution

The solution is a better underlying model. Of course, it’s possible for scaffolding to get you there, for a goal like playing Pokemon. As a degenerate case, you could simply tell Claude the exact set of inputs that would beat the game, and have it enact those inputs. But if you care about Claude actually figuring out Pokemon fair and square, you simply need a better Claude.

It’s very tempting, if you’re an AI lab or just an excited layperson, to believe that additional bells, whistles, and augmentations will make a flawed model dramatically stronger. But in my experience, and as a lesson from this specific experiment, scaffolding only gets you so far.

Another lesson is that reality has a surprising amount of detail. Playing Pokemon seems like a very simple activity. But when you start to get a little inhuman guy to do it, you suddenly realize that oh, yeah, NPCs do move before you can talk to them, and you need to consolidate memories at just the right frequency to avoid walking in big circles, and navigator systems won’t naively know you can jump down ledges, and…

Will AI be able to do this someday? I have little doubt. It’s getting there now, which is exciting. But I doubt it will be better scaffolding that gets us there. Probably, we’ll end up beating Pokemon Red the old fashioned way: with billions and billions of dollars, to train a stronger brain.

Notably, since I posted this, Claude has indeed made it through Mt. Moon! I don't think that really invalidates what I'm saying here; Claude's improved navigation/memory systems helped it in some ways relative to its first run (somewhat more efficient exploration), and hurt it in others (it invented the blackout strategy). Mt. Moon took it a little under 3 days the second time, and a little over 3 days the first time. So, improved scaffolding seems to be helping in aggregate... a little bit. But with totally unanticipated specific pitfalls.

Stronger underlying models are the most straightforward solution, but I don't see why scaffolding alone would be insufficient for Pokemon specifically, even without overfitting to Pokemon.

The loops you provided as examples could easily be resolved with just a small amount of grounding. In this case, grounding could be as simple as asking Claude, with minimal context in the prompt, "I am trying to do this/go here, this is my plan. Does this sound like a good plan?". With its base knowledge of Pokemon, it would easily resolve these.

This approach would not work nearly as well for more complex questions, or niche content (would be more difficult to avoid context contamination), but it would still cover a broad range of situations. Most hallucinations would not survive a majority vote unless the context is universally contaminated. And there are many other ways to attain ground truth through tool use, e.g., Claude can use code to determine how many r's are in strawberry.

That said, Claude's vision definitely needs improvement, which requires a stronger base model. It also struggles immensely with memory and learning in general, which seems difficult to remedy through scaffolding, but naive scaling also seems questionable as a solution. Regardless, these limitations don't seem significant enough to stop it from handling something as easy as Pokemon.