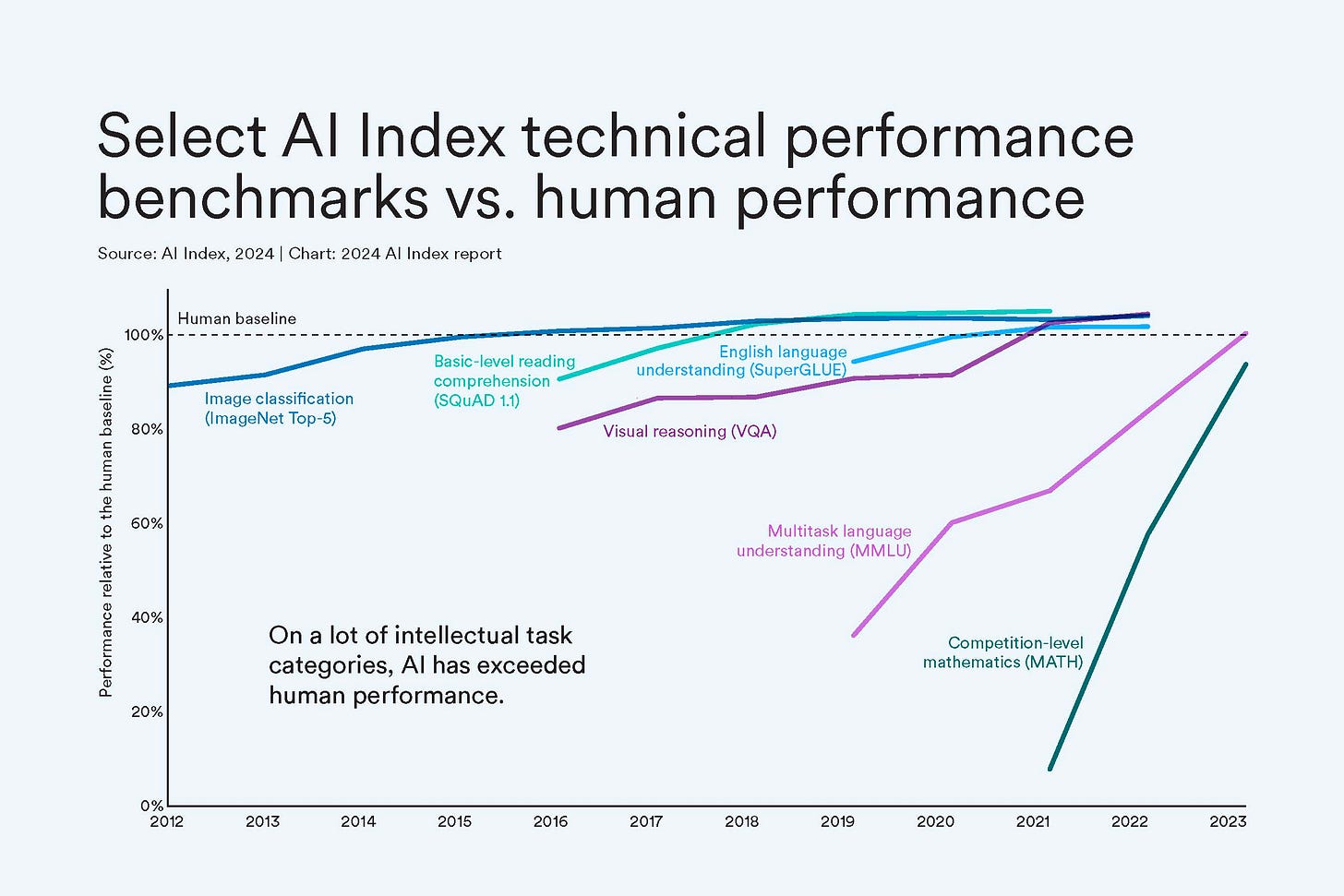

There’s much ado about AI benchmarks. Specifically, AI keeps breaking them. Time and again, there’s some set of programming challenges, math problems, or English questions that used to track how good AI was at the underlying activity, and then the latest AIs get near-perfect scores on it. For example, see this graph:

In this chart, 100% is human performance. In some cases it’s even more extreme: AI can simply get every question right on a formerly difficult exam.

Does this mean that AI is genuinely superhuman at various tasks? Well, maybe. But there are a few reasons for caution:

Data Contamination: LLMs hoover up a large fraction of the internet. But various AI benchmarks are on the internet! Unless precautions are taken to prevent this, AI might sometimes just know the precise question (and answer) ahead of time.[1]

Goodhart’s Law: AIs are judged on how they do on benchmarks, and thus so are the people who train them. So training runs that perform well on benchmarks are favored. That means there are probably cases where a given training run just so happens to do better on a benchmark than it would on equally difficult work elsewhere in the field.

Narrow Targeting: One of the most prominent (former) AI skeptics, and one of the most prominent LLM boosters, are economics professors. Notably, OpenAI’s flagship model o1 is great at economics. I don’t think this is some shadowy conspiracy, but it makes sense for the people growing these models to prioritize the stuff their interlocutors care about.

So now we’re at something of a loss. It’s obvious that LLMs are improving fast, and benchmark saturation doesn’t mean nothing. Also, there are new benchmarks, like this ultra-hard math one, that AI still scores very low on. But it’s easy to be skeptical of any given benchmark result, especially when you go play with a model which tests very well, and find it wanting.

My solution is simple: make my own benchmarks. When there’s a new model release, I like to do a few tests of my own.[2]

The Tests

Two of my three tests involve video games. In part, this is just because I like video games. But also, they’re a natural choice:

There’s tons of data about video games online, so in principle a sufficiently strong LLM could answer roughly any gaming question.

There’s usually an objective truth of the matter when it comes to gaming questions, and by asking shallower or deeper strategic questions, it’s easy to gauge model strength with finer granularity.

Video games are closed systems and thus convenient to reason about - it’s no coincidence that pre-LLM AI breakthroughs sometimes involved them.

I’ll include long excerpts from each model’s answer to these tests, up to the entire thing if it’s all relevant. Only bother reading the model outputs if you’re familiar with and interested in the topic - for any test where you aren’t, just skip to my evaluation. I’ve also mercilessly cut outputs where they were clear failures or irrelevant.

Within each test, I’ll start with OpenAI’s models, then Anthropic’s. Within each lab’s models, I’ll go from oldest/weakest to newest/strongest, among recent offerings.

Finally, these are my tests. If you take one thing away from this post, it should be to consider enacting the same practice with your own tests, unique to your interests!

Test One: Pokemon Meta Quiz

My very first post here was about using LLMs as a Pokemon battle coach, so I won’t cover that exact case.[3] But I also use a related test case: how well do LLMs understand and remember the broader Pokemon meta?[4]

I find this interesting because tons and tons of ink is spilled about Pokemon online - it is, after all, the biggest media franchise in the world - so all the data is there. But nobody at AI companies is trying to optimize for Pokemon chops.

Here’s the test:

When and why was Murkrow dominant in the VGC meta? As a bonus, what item would it typically hold during that period? Be specific about what threats, if any, it generally countered.

What I’m looking for:

In 2023 it shut down the otherwise dominant Dondozo with its Prankster-boosted Haze. It ran Eviolite for the boost to its Defense and Special Defense.

GPT-4 says:

Murkrow had a notable presence in the VGC (Video Game Championships) meta during the VGC 2013 season. This era allowed Pokémon from the National Dex, numbered 1 through 649, except for certain legendaries, to compete, which was quite permissive compared to some other VGC formats.

It later got the item right (Eviolite). But it looks like in 2013, Murkrow was only the 156th most-used Pokemon - hardly a powerhouse! GPT-4 fails.

GPT-4o says:

Murkrow was dominant in the Pokémon VGC 2023 meta (Scarlet & Violet) due to its Prankster ability and the utility it provided in Tailwind support. Here's an overview of its rise and specific details:

When:

Early 2023, particularly during the early stages of Scarlet & Violet Series 1 and Series 2 formats.

Why:

Prankster Ability:

It gave Murkrow priority on status moves, allowing it to reliably set up Tailwind for immediate Speed control. Speed was especially critical in a format filled with strong attackers that could overwhelm slower teams.

Priority on moves like Taunt, Haze, and Thunder Wave also made it highly versatile.

Haze:

Murkrow became one of the best counters to Dondozo + Tatsugiri, a very prominent archetype in the meta. This duo relied on Dondozo's stat boosts from Commander, which Murkrow could nullify instantly with Haze, crippling its sweeping potential.

Neutral Dark/Flying Typing:

While Murkrow's defensive stats were poor, it had few crippling weaknesses and could switch in on resisted or neutral hits, especially special ones.

Flexibility in Offensive/Supportive Role:

Its access to moves like Foul Play allowed it to deal solid damage to physically offensive threats, using their own high Attack stats against them.

Key Threats It Countered:

Dondozo + Tatsugiri: As noted, Haze shut down this strategy completely.

Gholdengo: Murkrow's Dark typing allowed it to threaten Gholdengo while resisting its powerful Make It Rain.

Hydreigon: Priority Tailwind let Murkrow's team outspeed and handle threats like Hydreigon, even if it carried Scarf.

Roaring Moon and other fast physical attackers: Foul Play allowed Murkrow to punish physical attackers effectively.

Common Item:

Eviolite: To improve its poor bulk, making it a more durable Tailwind setter and utility support.

Focus Sash: Sometimes used to guarantee it could survive a single strong hit and get its key support moves off.

Murkrow’s dominance faded somewhat as players adapted to its predictable moveset, opting for bulkier or more offensively viable Tailwind setters (e.g., Talonflame or Kilowattrel), but during its peak, it was a defining part of the meta.

This is a great answer. GPT-4o passes. I think it overrates Foul Play a little, and Foul Play kind of sucks against Roaring Moon specifically (Roaring Moon’s Dark typing resists it), but whatever. Good job!

GPT-o1-preview says, to conclude its lengthier reply:

Murkrow's combination of Prankster-boosted support moves, access to Haze, and enhanced bulk with the Eviolite made it a cornerstone in team compositions during the early VGC 2023 meta. It provided essential speed control, disrupted opposing strategies, and specifically countered dominant threats like the Dondozo-Tatsugiri combo, contributing to its dominance in the competitive scene at that time.

Correct! GPT-o1-preview passes. It avoids some of the smaller issues with 4o’s performance, and is a little better organized. Maybe a 9.5/10 to 4o’s 9/10, but not radically better.

Claude 3 Opus says:

Murkrow saw some usage in the VGC 2014 metagame thanks to its Prankster ability, which gave priority to non-damaging moves. This allowed it to quickly set up Tailwind to boost its team's Speed or use moves like Thunder Wave, Taunt and Quash to disrupt opponents.

Murkrow was particularly effective against common threats of that metagame like Mega Kangaskhan, Sylveon and Amoonguss. It could outspeed them and either paralyze them with Thunder Wave or prevent them from using crucial setup moves with Taunt.

During that time, Murkrow would typically hold a Focus Sash, allowing it to survive an attack and get off a crucial Tailwind or Thunder Wave. The Eviolite was another option to boost its bulk.

However, Murkrow's frailty and lack of strong offensive presence limited its dominance and usage. It was more of a niche pick on certain teams rather than a centrally defining force in the 2014 metagame.

As a reminder, while I've provided these details to the best of my knowledge, I may hallucinate or make mistakes when asked about such specific and obscure competitive Pokémon information. I'd recommend double checking the key details against trusted Pokémon fan sites or forums.

Cute that it caveats itself! It’s not totally wrong here, but 2023 is a much stronger choice of era, and Eviolite is clearly better than Focus Sash in most situations. Claude 3 Opus fails.

Claude 3.5 Sonnet says:

I should note that I don't recall Murkrow ever having a period of true dominance in VGC (Video Game Championships) competitive play. While Murkrow has seen occasional use as a support Pokémon in VGC formats due to Prankster giving it priority access to moves like Tailwind, Taunt, and Thunder Wave, it's typically been outclassed by its evolution Honchkrow or other Prankster users like Whimsicott and Tornadus.

Since you're asking about a specific meta period, it's possible I could be missing something from a particular format. Could you clarify which VGC season or format you're thinking of? That would help me provide more accurate information about any competitive success Murkrow might have had during that time.

So 3.5 Sonnet fails. Notably, it continued to fail even when further prompted, and specifically when told the correct era, hallucinating a different strategy/niche that was never a big deal (as far as I know) in the meta. I actually think 3.5 Sonnet does slightly worse than 3 Opus here - a moderate backslide for Anthropic’s flagship model.

So here we see recent and notable improvement from OpenAI, with a fail turning into a pass, while Anthropic has consistently failed.

Test Two: Xenoblade Themes

The Xenoblade series isn’t nearly as big a property as Pokemon, but it’s still major, and plenty has been written about it. Xenoblade games have big obvious themes that they explore, quite explicitly, over and over again. Basically they are the gaming equivalent of operas. I love them.

I like asking literary questions about video games because, unlike with major works of literature, there aren’t many existing answers to any given literary question. If I ask about Zorba the Greek, an LLM can lean on someone pondering that exact question on SparkNotes somewhere. But if I ask about Xenoblade 3, it has to do its own inference.

Here’s the test:

In Xenoblade 3, the vs Moebius theme prominently features a choir. Meanwhile, the protagonists’ main theme features a flute duet. Please, remembering to be decisive and specific, explore the literary subtext of these musical choices. Also, please be succinct - just a single well-constructed paragraph should do.

What I’m looking for:

Each Moebius member is an amalgamation of all their past lives, so the choir represents that totality of being and how oppressive it can be. The flute, meanwhile, has an ephemeral quality, representing the single life the protagonists live by contrast, and how precious that single life is.

I’ll also accept other meritorious analysis I haven’t thought of, but honestly I think the metaphor here is a bit of a slam dunk.

GPT-4 says:

In Xenoblade Chronicles 3, the musical distinction between the choral elements in the "vs Moebius" theme and the flute duet in the protagonists' main theme serves as a profound narrative device. The choir in the "vs Moebius" theme reflects the collective and impersonal nature of the Moebius, emphasizing their role as a unified antagonist force, devoid of individuality and driven by a singular, shared purpose. In stark contrast, the intimate and delicate sounds of the flute duet in the protagonists’ theme symbolize the personal journeys and individual stories of the main characters, highlighting their humanity, interconnectedness, and the personal stakes involved in their quest. This juxtaposition underlines the overarching conflict of the game: the struggle between the collective, oppressive forces and the personal, liberating journeys of individuals.

Superficial. A marginal fail.

GPT-4o says:

In Xenoblade Chronicles 3, the choir in the "vs Moebius" theme symbolizes the collective, oppressive force of the Moebius, embodying their unity as antagonists who thrive on perpetual cycles of war and despair. The grandeur of the choir contrasts sharply with the flute duet in the protagonists' theme, which represents individuality, personal bonds, and the fragile yet beautiful freedom they seek. The flute's prominence ties to its narrative role as a tool for honoring life and breaking oppressive cycles, suggesting the protagonists' fight against Moebius is not just physical but deeply spiritual and existential—a struggle between overwhelming, dehumanizing collectives and intimate, life-affirming individuality.

Hmm. It got the protagonist part right. I suppose the choir can be read as symbolizing the collective nature of Moebius as a whole, rather than the fact that each specific one you’re fighting is also a collective. I’ll call this a pass, but I’m not enthusiastic.

GPT-o1-preview says:

The use of a choir in the "vs Moebius" theme symbolizes the overwhelming collective force of Moebius, representing conformity and the suppression of individual voices through a unified, almost oppressive sound. In contrast, the protagonists' main theme features a flute duet, highlighting individuality and personal connection through the delicate interplay of two distinct melodies. This juxtaposition musically underscores the game's central conflict between the homogenizing tyranny of Moebius and the protagonists' pursuit of freedom and self-expression, reinforcing themes of individuality versus collectivism.

Another small step up. Not perfect or anything, but a comfortable pass. Bonus points for the word “oppressive”. It’s good that it caught “the suppression of individual voices”, which is a big part of what I was going for.

Claude 3 Opus says:

The contrasting musical choices in Xenoblade 3's themes for the Moebius and the protagonists carry significant literary subtext. The choral vocals in the Moebius theme evoke a sense of grandeur, uniformity, and an almost religious fervor, mirroring their role as the game's primary antagonists who impose a rigid, cyclical order upon the world. In stark juxtaposition, the flute duet of the protagonists' theme symbolizes their humanity, individuality, and the intimate, personal nature of their bonds and struggles. The interplay between the two flutes suggests dialogue, mutual understanding, and cooperation—the very qualities that drive the protagonists' fight against the monolithic Moebius. Thus, the musical motifs underscore the central conflict between the individual and the collective, freedom and control, that lies at the heart of Xenoblade 3's narrative.

Pretty good. I notice that both this model and GPT-o1-preview like using “almost” before stuff that’s not at all “almost” in these replies, but I digress. I’ll put this a hair over GPT-4: “grandeur” is a lot more accurate than “impersonal”, and the part about cooperation (between former enemies, in the game’s plot) is a good point for the flute. A weak pass.

Claude 3.5 Sonnet says:

The musical juxtaposition between the oppressive choir in "vs Moebius" and the intimate flute duet of the protagonists' theme underscores the core thematic conflict of Xenoblade Chronicles 3. The choir, with its imposing ecclesiastical connotations, represents the artificial order and false divinity imposed by Moebius - a system that subsumes individual voices into a controlled mass. In contrast, the flute duet symbolizes the organic, personal connection between Noah and Mio, whose melodies intertwine while maintaining their distinct voices, embodying the game's themes of harmony achieved through genuine connection rather than forced uniformity. The flute, being a wind instrument powered by breath, also reinforces the game's central motif of life's finite but meaningful nature, contrasting sharply with Moebius's sterile immortality.

Whoa. Very strong pass, and bonus points for including stuff I hadn’t thought of but that clearly rings true. Additional bonus points for being actually specific. It did miss the notion of individual Moebius members being amalgamations, but whatever. Literature is subjective.

Overall for this test, OpenAI’s models muddle through, going from pretty bad to pretty good, while Claude’s go from mediocre to excellent in a sudden phase change. Anthropic is the clear winner here.

Test Three: Abstract Algebra

I taught myself abstract algebra earlier this year, and both GPT-4 and Claude were a big help. But they also often got stuff comically wrong, especially on questions that were at all “off the beaten path” of the most popular examples and theorems. This test is to see how much better my former tutors would do in their current incarnations.

Here’s the test:

Please list the elements of the Galois group for the polynomial x^3 + 3, and briefly list groups it’s isomorphic to.

What I’m looking for:

It’s got six elements, one for each permutation of the 3 complex cube roots of -3. It’s isomorphic to S3.
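One standard way to see why the answer is six elements rather than three: a cubic with no rational root is irreducible over ℚ, and the Galois group of an irreducible cubic is the cyclic group A3 (isomorphic to Z3) exactly when its discriminant is a perfect square in ℚ, and S3 otherwise. For a cubic of the form x^3 + px + q, the discriminant is -4p^3 - 27q^2, which for x^3 + 3 is -243: negative, so not a square. The sketch below applies that test. It only handles monic integer cubics with no x^2 term, and the function names are illustrative.

```python
from math import isqrt


def cubic_discriminant(p: int, q: int) -> int:
    """Discriminant of the monic cubic x^3 + p*x + q."""
    return -4 * p**3 - 27 * q**2


def has_rational_root(p: int, q: int) -> bool:
    """Rational root test: for a monic integer cubic, any rational root
    is an integer dividing the constant term q."""
    if q == 0:
        return True  # x = 0 is a root
    for d in range(1, abs(q) + 1):
        if q % d == 0 and any(r**3 + p * r + q == 0 for r in (d, -d)):
            return True
    return False


def is_perfect_square(n: int) -> bool:
    """An integer is a square in Q exactly when it is a square in Z."""
    return n >= 0 and isqrt(n) ** 2 == n


def cubic_galois_group(p: int, q: int) -> str:
    """Galois group over Q of x^3 + p*x + q, which must be irreducible."""
    if has_rational_root(p, q):
        raise ValueError("cubic has a rational root, so it is reducible over Q")
    if is_perfect_square(cubic_discriminant(p, q)):
        return "A3 (cyclic, order 3)"
    return "S3 (order 6)"


print(cubic_discriminant(0, 3))   # -243
print(cubic_galois_group(0, 3))   # S3 (order 6)
print(cubic_galois_group(-3, 1))  # A3 (cyclic, order 3)
```

The last line is there for contrast: x^3 - 3x + 1 has discriminant 81 = 9^2, so it is the kind of cubic where "three elements, cyclic" would actually be the right answer.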

GPT-4 says that it’s a cyclic group with three elements, and thus isomorphic to Z3. Its reasoning is superficially close, but spotty. Worse, it’s close enough to coherent that if I were actually using it during my studies, I’d have come away a little more confused than I started. A fail.

GPT-4o gets it totally right, with solid and succinct reasoning. Strong pass.

GPT-o1-preview also gets it right, and does a better job explicitly listing all six elements. But 4o was pretty close to perfect already, so o1-preview mostly just doesn’t make things worse. Also strong pass.

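Claude 3 Opus gets it right and is really succinct, with one small slip: the α, β, γ it lists are actually the roots of x^3 - 3 (the roots of x^3 + 3 are their negatives), which happily leaves the splitting field and the group unchanged. Here’s its answer: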

The polynomial x^3 + 3 is irreducible over the rationals ℚ. Its splitting field is ℚ(ω, 3^(1/3)), where ω is a primitive cube root of unity.

The Galois group G of x^3 + 3 over ℚ permutes the roots:

α = 3^(1/3)

β = ω⋅3^(1/3)

γ = ω^2⋅3^(1/3)

The elements of G are:

Identity: (α → α, β → β, γ → γ)

(α → β, β → γ, γ → α)

(α → γ, β → α, γ → β)

(α → α, β → γ, γ → β)

(α → β, β → α, γ → γ)

(α → γ, β → β, γ → α)

|G| = 6, so G is isomorphic to:

S_3, the symmetric group on 3 letters

D_6, the dihedral group of order 6

Strong pass with bonus points for nice formatting and no excessive explaining.
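Opus’s list can also be checked mechanically. The sketch below encodes each of its six maps as a permutation of root indices (α, β, γ as 0, 1, 2) and confirms that they are exactly the six permutations of three roots and that, as permutations, they are closed under composition but do not all commute. Since the only non-abelian group of order 6 is S3, that pins down the isomorphism (the dihedral group of order 6 is written D_6 in some texts and D_3 in others). It also computes the roots of x^3 + 3 numerically, as the three complex cube roots of -3. The encoding and names are illustrative.

```python
import cmath
from itertools import permutations

# The roots of x^3 + 3 are the three complex cube roots of -3.
omega = cmath.exp(2j * cmath.pi / 3)  # primitive cube root of unity
cbrt3 = 3 ** (1 / 3)                  # real cube root of 3
roots = [-cbrt3, -cbrt3 * omega, -cbrt3 * omega**2]
assert all(abs(r**3 + 3) < 1e-9 for r in roots)

# Opus's six maps as tuples: position i holds the image of root i
# (alpha = 0, beta = 1, gamma = 2).
maps = {
    (0, 1, 2),  # identity
    (1, 2, 0),  # alpha -> beta, beta -> gamma, gamma -> alpha
    (2, 0, 1),  # alpha -> gamma, beta -> alpha, gamma -> beta
    (0, 2, 1),  # swap beta and gamma
    (1, 0, 2),  # swap alpha and beta
    (2, 1, 0),  # swap alpha and gamma
}


def compose(f, g):
    """Apply g first, then f."""
    return tuple(f[g[i]] for i in range(3))


is_all_perms = maps == set(permutations(range(3)))
is_closed = all(compose(f, g) in maps for f in maps for g in maps)
is_abelian = all(compose(f, g) == compose(g, f) for f in maps for g in maps)

print(len(maps), is_all_perms, is_closed, is_abelian)  # 6 True True False
```

For example, swapping α and β after swapping β and γ gives the 3-cycle α → β → γ → α, while doing the swaps in the other order gives the opposite 3-cycle, which is where the non-commutativity comes from.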

Claude 3.5 Sonnet gets it wrong, falling into the same pitfall as GPT-4 and deciding the group has three elements! That’s a backslide for 3.5 Sonnet, and a clear fail.

Comparing Anthropic and OpenAI here, I think this test is more or less a draw. Claude 3 Opus did the best, but Anthropic’s flagship model regresses. If pressed, I’d give the advantage to OpenAI. But I suspect that across a lot of moderately advanced abstract algebra questions, each lab would win some and lose some, with GPT-o1-preview coming out on top, probably thanks to mathematics-specific tuning.

Who Cares?

About my specific benchmarks? You probably shouldn’t very much. This post is more about showing my approach, and (hopefully) encouraging other people to make their own personalized benchmarks. If you want to know how good each new AI offering is, there’s nothing quite like loading up your own questions tailored to your own interests, and seeing how the LLMs stack up.
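If you do start keeping your own tests, recording the verdicts in a structured way makes it easier to compare releases over time. Here is a minimal sketch that encodes the pass/fail verdicts from the three tests above and tallies them per model. The layout and names are illustrative, and a “marginal fail” or “weak pass” is simply recorded as the fail or pass it was called.

```python
# Pass (True) / fail (False) verdicts from the three tests in this post.
VERDICTS: dict[str, dict[str, bool]] = {
    "Pokemon meta quiz": {
        "GPT-4": False, "GPT-4o": True, "GPT-o1-preview": True,
        "Claude 3 Opus": False, "Claude 3.5 Sonnet": False,
    },
    "Xenoblade themes": {
        "GPT-4": False, "GPT-4o": True, "GPT-o1-preview": True,
        "Claude 3 Opus": True, "Claude 3.5 Sonnet": True,
    },
    "Abstract algebra": {
        "GPT-4": False, "GPT-4o": True, "GPT-o1-preview": True,
        "Claude 3 Opus": True, "Claude 3.5 Sonnet": False,
    },
}


def tally(verdicts: dict[str, dict[str, bool]]) -> dict[str, int]:
    """Count passes per model across every test (models with no passes get 0)."""
    passes: dict[str, int] = {}
    for results in verdicts.values():
        for model, passed in results.items():
            passes[model] = passes.get(model, 0) + int(passed)
    return passes


scores = tally(VERDICTS)
for model, score in scores.items():
    print(f"{model}: {score}/{len(VERDICTS)}")
```

Adding a new model release is then one entry per test, and the per-model totals update automatically.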

Footnotes

[1] There is concrete evidence of this happening. Benchmark makers use “canary strings” (as in “canary in a coal mine”): specific sequences of characters placed alongside stuff that AIs aren’t supposed to know about. So if an AI can be shown to know the canary string, it probably knows the secret stuff, too. And indeed, at least one canary string from a major benchmark has been found.

[2] I’m doing this as a consumer of OpenAI’s and Anthropic’s products, so I just use the chat interface, rather than the developer API.

[3] Though I will note that every model I’ve tested continues to be pretty bad at it.

[4] In gaming, “meta” is a term that means, basically, “what’s commonly used in competitive play, and for what reasons”. So if a Fire-type Pokemon is dominant in the summer, some particularly splashy Water-type Pokemon might be ascendant by the fall.